BayesConformPred

Leveraging Conformal Prediction with estimated Uncertainty in Deep Learning for disease detection

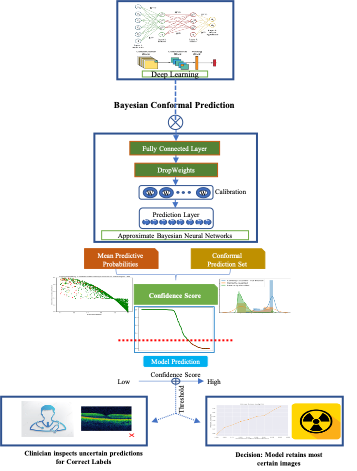

The Eye2Gene team have already developed an algorithm that predicts which gene is causing an inherited retinal disease (IRD) from a standard retinal scan, currently with an accuracy of up to 80% [1]. The algorithm has analysed thousands of images and learnt to recognise patterns that link an individual image to specific gene mutations. Deep learning (DL) provides powerful black-box predictors and has recently outperformed human experts in several medical diagnostic problems. However, these methods focus almost exclusively on improving the accuracy of point predictions without assessing the quality of their outputs, and they ignore the asymmetric costs of different types of misclassification error. Neural networks also do not deliver reliable confidence estimates for their predictions: they can be over- or under-confident, i.e. they are not well calibrated. Knowing how much confidence to place in a prediction is essential for gaining clinicians’ trust in the technology.

Bayesian Neural Networks (BNN) provide a natural and principled way of modelling uncertainty and are robust to over-fitting (i.e. they act as a form of regularisation). However, exact Bayesian inference is computationally intractable, and the coverage of the resulting uncertainty estimates relies on many unverifiable assumptions. The Conformal Prediction (CP) framework quantifies the uncertainty of predictions from machine learning models and automatically guarantees a maximum error rate under the assumption of exchangeability. Specifically, conformal prediction outputs a prediction set that contains the true label with at least a probability specified by the practitioner, leading to improved generalisation and calibrated predictive distributions.
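As an illustration of the coverage guarantee described above, the following is a minimal sketch of split conformal prediction for classification, in the style of the introduction by Angelopoulos and Bates. It is not the project's implementation. The function names `conformal_threshold` and `prediction_sets`, the nonconformity score (one minus the probability of the true class), and the synthetic `fake_model` data are all assumptions made for this example; `alpha` is the practitioner-chosen maximum error rate, assumed to lie strictly between 0 and 1.

```python
import math
import numpy as np

def conformal_threshold(cal_probs, cal_labels, alpha):
    """Return the score threshold q_hat from a held-out calibration set.

    cal_probs:  (n, n_classes) predicted class probabilities (e.g. softmax).
    cal_labels: (n,) integer true labels.
    alpha:      target maximum error rate, 0 < alpha < 1.
    """
    cal_probs = np.asarray(cal_probs, dtype=float)
    cal_labels = np.asarray(cal_labels)
    n = len(cal_labels)
    # Nonconformity score: one minus the probability given to the true class.
    scores = 1.0 - cal_probs[np.arange(n), cal_labels]
    # Finite-sample rank: the ceil((n + 1)(1 - alpha))-th smallest score.
    k = math.ceil((n + 1) * (1.0 - alpha))
    if k > n:
        # Too few calibration points for this alpha: keep every class.
        return float("inf")
    return float(np.sort(scores)[k - 1])

def prediction_sets(test_probs, q_hat):
    """Boolean mask (n_test, n_classes); True marks classes kept in the set."""
    return (1.0 - np.asarray(test_probs, dtype=float)) <= q_hat

# Synthetic stand-in for classifier outputs (hypothetical, not Eye2Gene data).
rng = np.random.default_rng(0)
n_classes = 5

def fake_model(n):
    labels = rng.integers(0, n_classes, size=n)
    logits = rng.normal(size=(n, n_classes))
    logits[np.arange(n), labels] += 2.0  # make the true class more likely
    probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
    return probs, labels

cal_probs, cal_labels = fake_model(1000)
test_probs, test_labels = fake_model(1000)

q_hat = conformal_threshold(cal_probs, cal_labels, alpha=0.1)
sets = prediction_sets(test_probs, q_hat)
coverage = sets[np.arange(len(test_labels)), test_labels].mean()
print(f"q_hat={q_hat:.3f}  coverage={coverage:.3f}  "
      f"mean set size={sets.sum(axis=1).mean():.2f}")
```

Under exchangeability of calibration and test data, the sets built this way contain the true label with probability at least `1 - alpha` on average, regardless of how well the underlying classifier is calibrated. With this particular score, a set can be empty when no class is confident enough.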

Aims

This project aims to develop novel methodologies for conformal prediction with estimated uncertainty in deep learning, together with a software toolkit for IRD detection. The proposed method will be achieved through three concrete and actionable research tasks:

- data uncertainty in IRD imaging;

- model uncertainty quantification with conformal prediction sets; and

- clinician-in-the-loop AI: conformal prediction sets with uncertainty-informed decision referral to improve diagnostic performance.

Human validation, intervention, and more extensive tests can thus be carried out in identified high-uncertainty cases to avoid potential errors. The resulting software toolkit will be validated through an AI-assisted IRD detection application and is transferable across a wide range of diagnostic applications.
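One way the uncertainty-informed referral step could look is sketched below. It assumes that conformal prediction sets are already available as a boolean mask (one row per case, one column per class) and uses a simple rule chosen for illustration: a case is reported automatically only when its set contains exactly one class, and is otherwise referred to a clinician. The function name `triage`, the rule itself, and the class labels are hypothetical and are not taken from the project.

```python
import numpy as np

def triage(set_mask, class_names):
    """Split cases into automatic reports and clinician referrals.

    set_mask:    (n_cases, n_classes) boolean prediction sets.
    class_names: list of n_classes labels.
    Returns a list of (action, candidate_labels) tuples, one per case.
    """
    set_mask = np.asarray(set_mask, dtype=bool)
    decisions = []
    for row in set_mask:
        candidates = [name for name, keep in zip(class_names, row) if keep]
        # Exactly one candidate: low uncertainty, report it directly.
        # Empty or multi-class set: high uncertainty, refer to a clinician.
        action = "report" if len(candidates) == 1 else "refer"
        decisions.append((action, candidates))
    return decisions

class_names = ["gene_A", "gene_B", "gene_C"]  # hypothetical labels
example_sets = [
    [True, False, False],   # confident single prediction
    [True, True, False],    # ambiguous between two classes
    [False, False, False],  # no class is plausible enough
]
for action, candidates in triage(example_sets, class_names):
    print(action, candidates)
```

Referred cases keep their candidate list, so the clinician sees which labels the model could not rule out rather than a single point prediction.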

References:

- Pontikos, Nikolas, William Woof, Advaith Veturi, et al. "Eye2Gene: prediction of causal inherited retinal disease gene from multimodal imaging using deep-learning." Research Square preprint (Version 1), 25 October 2022. https://doi.org/10.21203/rs.3.rs-2110140/v1

- Angelopoulos, Anastasios N., and Stephen Bates. "A gentle introduction to conformal prediction and distribution-free uncertainty quantification." arXiv preprint arXiv:2107.07511 (2021).

- Ghoshal, Biraja, Bhargab Ghoshal, and Allan Tucker. "Leveraging Uncertainty in Deep Learning for Pancreatic Adenocarcinoma Grading." In Annual Conference on Medical Image Understanding and Analysis, pp. 565-577. Springer, Cham, 2022.

- Ghoshal, Biraja, Allan Tucker, Bal Sanghera, and Wai Lup Wong. "Estimating uncertainty in deep learning for reporting confidence to clinicians in medical image segmentation and diseases detection." Computational Intelligence 37, no. 2 (2021): 701-734.